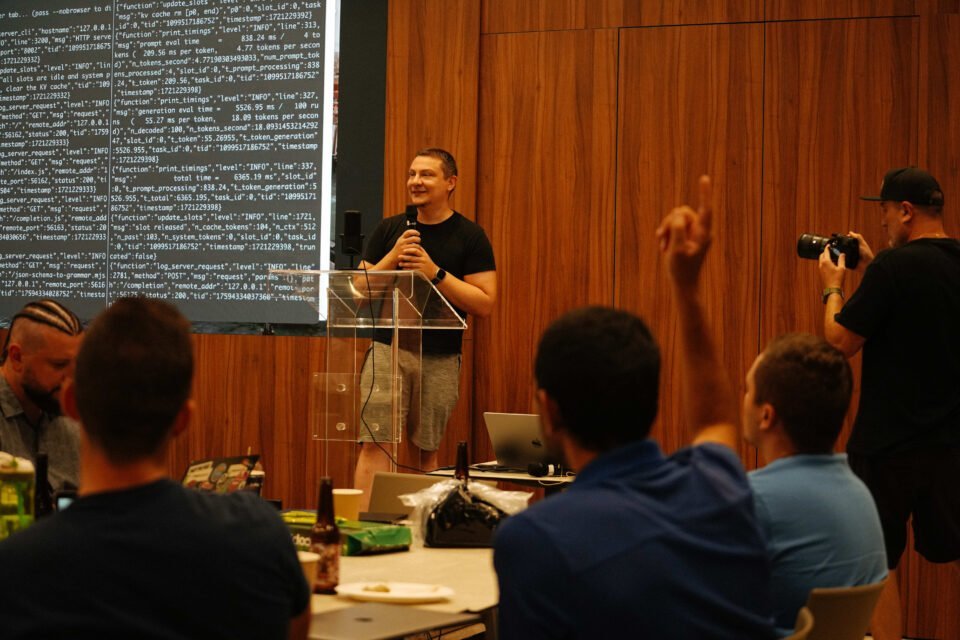

On Wednesday, AIFoundry hosted a discussion about artificial intelligence, where it should be heading, and alternative models that run on minimal resources.

At the offices of BrainRocket in Limassol, machine learning engineers and AI developers had the opportunity to see cutting-edge AI technology first-hand and explore topics such as running large language models (LLMs) on local machines, mass AI adoption, AI in Rust, and homomorphic encryption in AI.

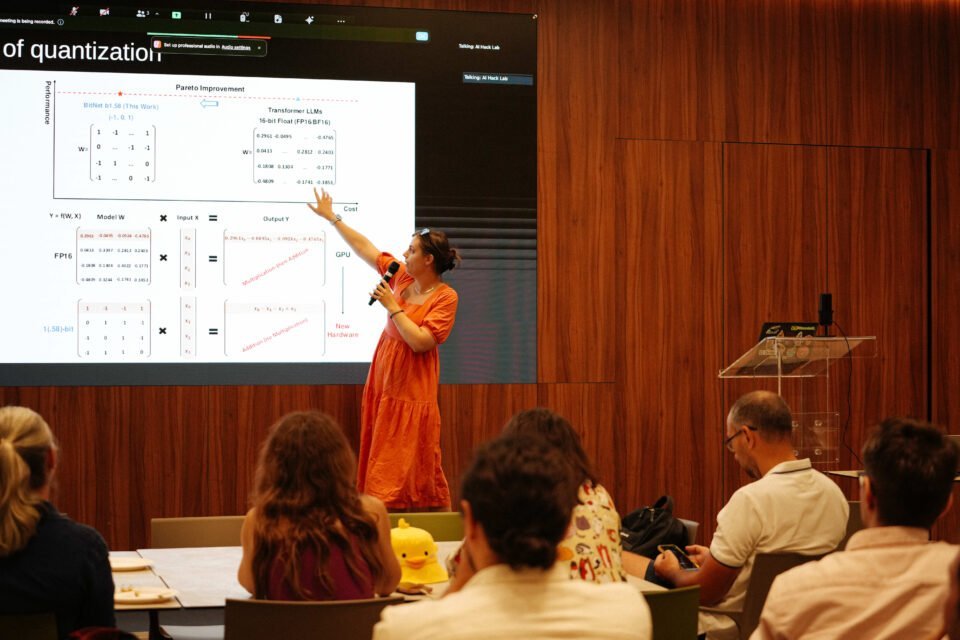

The event opened to a full room with the two founders of AIFoundry.org, Tanya Dadasheva and Roman Shaposhnik. AIFoundry.org is a community initiative to create an open ecosystem of shareable practices and technologies for artificial intelligence (AI) and machine learning (ML). The goal is to allow everyone to own their AI.

The two of them invited the attendees to ask themselves “Where are we at the scale of things when it comes to AI?” Their answer was that the current state of AI is chaotic.

That, they argued, is why investors struggle to know which models to invest in when it comes to artificial intelligence. As they simply put it, “there is a lot for investors to process and understand”.

As they noted, the AI industry has a long and interesting history, so complexity around the subject is to be expected. They also brought up the example of TCP/IP and its complexity in the early days of its development, before the internet started taking a more commercial form. “Everybody was looking for something magical to solve the complexity,” they highlighted. Today, in AI, that magic lies in Transformers.

Without going into the technicalities as the two speakers did, we can say that Transformers are a type of AI model used for processing language. They use an attention mechanism to focus on different parts of the input, which lets them understand each word in the context of the rest of the sentence. Because every word can be handled at the same time rather than one after another, they process data in parallel, making them faster and more efficient. This architecture has revolutionized tasks like translation, summarization, and text generation by providing a better understanding of language.
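For readers who want to see the idea in code, here is a minimal sketch of scaled dot-product attention, the core operation inside a Transformer. It is an illustration only and was not shown at the event: the three toy token vectors are made-up numbers, and a real model would first pass the tokens through learned projection matrices, which are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token "looks at" every token in the input.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted mix of all value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 2-dimensional vectors for three tokens (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights {[round(w, 3) for w in row]}")
```

Each row of weights is computed independently of the others, which is why the work can be done in parallel.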

The speakers noted that we are still in the early stages and that a chip is needed to make all this possible. But we are close. The tech community is hopeful about what is coming and is looking for a moment equivalent to the arrival of the Linux Foundation or Red Hat.

Engineers and developers ideally want all the models and architectures to exist, but they are wary of the big-name enterprises, and that is why investments are not so broad. Enterprises that are going to use AI in their processes must also ask questions such as what is in the models they are using and what happens to their data.

Here comes the Llamagator

Chris Hasinski demonstrated the capabilities of Llamagator during a presentation by the AIFoundry hacker team, which showcased the innovative web application designed for managing large language models (LLMs). The presentation highlighted the practical functionalities of Llamagator through a live demo, illustrating how users can interact with the application to manage and test various AI models using different prompts. The engaging demo piqued the interest of many attendees, leading to a significant number expressing a desire to join AIFoundry’s Discord server for further discussions and collaboration (https://discord.gg/uqkuz529gk).

Llamagator, built on Ruby on Rails 7.1.3 and utilizing PostgreSQL 14 as its database, is engineered to provide a seamless and efficient platform for LLM management. The application offers a range of features that cater to both novice and experienced AI practitioners. One of the primary functionalities is the ability to create and manage different models and their versions, allowing users to maintain a comprehensive library of AI models within a single interface. This feature supports the development and refinement of various AI solutions by enabling easy tracking and versioning of models.

In addition to model management, Llamagator includes robust configuration management tools that allow users to customize individual parameters for each model and version. This flexibility ensures that models can be fine-tuned to meet specific requirements and performance goals. The application also facilitates the creation of prompts, which are essential for defining the actions or tasks that the models will perform. Users can then execute these prompts using any specified models and model versions, making it straightforward to test and deploy AI solutions.

One of the standout features of Llamagator is its capability to evaluate and compare the results obtained from executing prompts. This functionality enables users to analyze the performance of different models and configurations, providing valuable insights into their effectiveness and suitability for various tasks. By offering tools for detailed evaluation and comparison, Llamagator helps users make informed decisions about which models to use and how to optimize them for better results.

Getting practical

Next on stage was Federico Rampazzo, Consultant at API Plant LTD, with the talk “Burn Framework and the Rust ecosystem”. His speech focused on the use of Rust as a programming language for addressing generic AI problems. He highlighted several key advantages of Rust, including its strong static typing, which catches many errors at compile time and so leads to fewer bugs. The ownership system in Rust also contributes to safer and more reliable code. Additionally, Rust’s easy development process and fearless concurrency make it a compelling choice for AI development. The language’s great tooling further enhances the developer experience, making Rust a robust option for AI projects.

Rampazzo shared his personal experiences with both Python and Rust in AI projects. He has successfully executed two generative AI projects in Rust, including one utilizing Retrieval Augmented Generation (RAG). In contrast, his work in Python includes two generative AI projects and one project involving JavaScript/Python for generative AI. This comparative experience underscores the versatility and strength of Rust in handling AI development.

He also discussed the use of Panda and its tools, emphasizing their importance in model inference. For model inference and training, he mentioned the use of Candle and Burn, two powerful tools in the AI development landscape. Rampazzo provided a thorough overview of the available models that developers can use for training AI, ensuring that the audience had a comprehensive understanding of the options at their disposal.

She was followed on stage by Yulia Yakovleva, Head of AI Research at Nekko.ai, with the talk “Run LLM on your machine with llamacpp and llamafile”. In her speech, she focused on the evolution of models in the AI landscape, particularly before and after 2023. She explained that before 2023, AI models typically required multiple GPUs to operate efficiently. This setup was complex and resource-intensive. However, a significant shift occurred with the advent of LLaMA, a model that became widely accessible after being leaked and made open source.

Yakovleva highlighted the contributions of Georgi Gerganov, who played a pivotal role in the developments of 2023. She delved into the technical proposals of that year, emphasizing the breakthrough that allowed large language models (LLMs) to run with enhanced efficiency. Yakovleva provided a detailed explanation of how memory functions in this new paradigm, shedding light on the technological advancements that have simplified and improved the performance of AI models.
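To give a feel for why memory is the deciding factor when running a model on a single machine, here is a back-of-the-envelope sketch. It rests on assumptions that do not come from the talk: it supposes that storing weights at lower precision is one of the techniques involved, it uses a hypothetical 7-billion-parameter model, and it counts only the weights, ignoring the extra memory needed while the model is running.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory needed to hold the model weights, in GiB."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1024**3

# Hypothetical model size, chosen for illustration only.
PARAMS = 7_000_000_000

for bits in (16, 8, 4):
    gib = weight_memory_gib(PARAMS, bits)
    print(f"{bits}-bit weights: about {gib:.1f} GiB")
```

Under these assumptions, halving the number of bits per weight halves the memory footprint, which is the kind of saving that moves a model from a multi-GPU server to an ordinary laptop.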

Ioannis Kourouklides, the co-founder of the non-profit foundation Gut-ai.org, addressed the significant challenges faced by many startups in the AI sector. He noted that numerous startups encounter difficulties in developing the AI components necessary for their products. To address this need, Gut-ai.org was established with the mission of releasing AI tools and resources as open source, thereby providing valuable support to the startup community.

Kourouklides shared the foundation’s ambitious roadmap, which includes plans to begin securing funding by the end of 2024. This funding will be crucial in sustaining their efforts to democratize access to AI technology and foster innovation across the industry. Through Gut-ai.org, Kourouklides aims to create an ecosystem where startups can thrive by leveraging open-source AI solutions.

Sergey Sergyenko, VP of Engineering at Nekko.ai, also gave the crowd a taste of Git blame, showing how a tool can learn from git commands, hold discussions, and serve as the basis for a new model, BlameAI.

The event concluded with a hands-on demonstration of various AI models and their capabilities. Participants had the opportunity to look at the technicalities inside a llamafile, with the organizers demonstrating how different models work using real-world prompts such as “What is the weather in Cyprus?” These interactive examples showcased the practical applications of AI and engaged the audience in meaningful discussions about the future of AI technology.

Overall, the event provided participants with valuable insights into the latest developments in AI and fostered a sense of community among AI professionals dedicated to advancing the field. The discussions highlighted the importance of open-source initiatives, the potential of new programming languages like Rust, and the transformative impact of recent advancements in AI model efficiency. As the AI landscape continues to evolve, events like this play a crucial role in bringing together experts and enthusiasts to share knowledge, collaborate, and drive innovation forward.